The 8th Pro²Future Partner Conference is now behind us. It was a particularly significant edition because it focused on the future of the center.

This year, we had the honor of hosting more than 70 participants from leading industrial companies and academic institutions in Austria and abroad, including the Technical University of Munich, University of Leoben, Johannes Kepler University Linz, Graz University of Technology, AVL, Fronius, ANTEMO, Four Panels, WK STMK, RoboCup, Voestalpine, Platform Industrie 4.0, Siemens, Böhler, Land Steiermark, Primetals, SFG, UAR, DIH Süd, EWW, Land OÖ, DewineLabs, Silicon Alps…

To kick off the conference, Univ.-Prof. Dr. Alois Ferscha delivered a presentation titled “Pro²Future Epoch II,” in which he outlined the center’s position and its vision for the coming years. Our current area managers then shared what to expect from their respective research areas in the future.

Just before the first break, we had the privilege of hearing the keynote “Cohabitation, Cooperation and Complementation of Humans and Robots in the Factories of the Future” by Prof. Dr. Achim Lilienthal, Professor of Perception for Intelligent Systems at TU Munich.

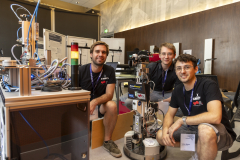

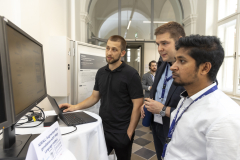

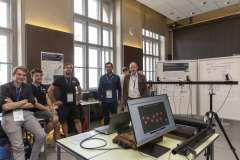

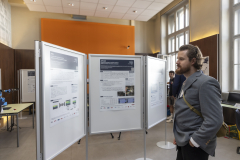

After the break, our CEO, DI Gerd Hribernig, gave an overview of the center, its progress, achievements, and future plans. Throughout the conference, we showcased 13 demonstrators accompanied by 13 posters, highlighting our latest research results and our unwavering commitment to helping our industrial partners improve their production environments.

The list of demonstrators with their brief descriptions is provided below.

Area 1: Perception and Aware Systems

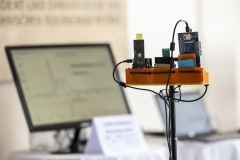

Factory-Scale Air Quality Sensing: This system uses AI-based technology to improve occupational safety in a large-scale polymer production environment. Embedded systems monitor the air quality and issue real-time warnings to workers when hazards arise. The data is also transmitted to the cloud for immediate monitoring and analysis.

Human <> Drone Interaction Study: When drones serve as AI-powered cobots, they depend on pleasant interactions with humans. In this study, the drone uses eye contact as a form of acknowledgment and approaches a user only after eye contact has been established. A ground vehicle receives the drone’s video feed and uses deep neural networks to track the interactions.

Area 2: Cognitive Robotics and Shop Floors

Cognitive Engineering Process Support: This collaborative engineering approach supports engineers and process modelers in several ways: it improves the traceability of and navigation between artifacts, enables finer-grained artifact properties in process models, accommodates temporal process constraints, detects and corrects process deviations in real time, and provides customized, real-time process guidance for engineers.

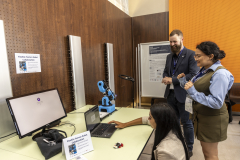

Flexible Human-Robot Collaboration: In this use case, we adapt human-machine collaboration to flexible manufacturing systems. We enhance the robot’s perception of its environment with diverse sensory inputs and ML models, and we track the assembly process to synchronize the collaboration between human and robot, giving human workers more flexibility.

Area 3: Cognitive Decision Making

MANDALA (Multivariate ANomaly Detection & exploration): MANDALA is a platform for identifying and analyzing anomalies in multivariate time series data. It allows users to easily explore and assess anomaly candidates, the dimensions involved, and temporal aspects in complex data. With its user-friendly interface, MANDALA simplifies the handling of large, high-dimensional sensor data.

Visual Analytics for Production Systems: This project provides an interactive visualization of production parameters, with defects color-coded by type. The dashboard offers an overview with zoom-in capabilities for detailed analysis, helping users identify which production parameters may be causing defects. Filters narrow the view to specific subsets of the data, while customizable tooltips and a heatmap reveal additional information and local fluctuations in the parameters.

AERIALL (Augmented tExt geneRation wIth lArge Language modeLs): AERIALL is a web-based application built on a locally hosted, pretrained large language model. Users can upload domain-specific documents to extend the model’s knowledge base, enabling precise answers to tailored questions across various domains. The platform prioritizes security and privacy.

VEEDASZ (Visual Exploration of Engineering Data with Analytical Semantic Zoom): VEEDASZ allows domain experts to explore multiple time series in a map view. At low zoom levels, it shows an abstracted view of the data with only the essential attributes; zooming in reveals the detailed time series. It also offers a powerful lensing feature for grouping data and visualizing similarities and dissimilarities.

Area 4.1: Cognitive Products

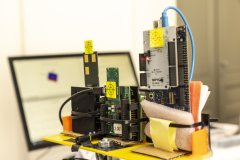

UWB Distance Demonstrator: The Ultra-Wideband (UWB) demonstrator showcases indoor wireless distance measurement using robust UWB technology, designed to stay accurate across different settings. Three UWB modules collect data in real time and transmit it via WLAN for live display, demonstrating precise measurements in both Line-of-Sight (LoS) and Non-Line-of-Sight (NLoS) scenarios. In addition, our research on TinyAI has identified ML models and methods that address the challenges of NLoS conditions.

Cognitive Monitoring System: The CORVETTE applications are designed to monitor various vehicle parameters concurrently on a single, robust in-vehicle edge device. The Cognitive Monitoring System includes algorithms for weather and tunnel detection, object and vehicle detection with traffic density estimation, real-time digit recognition to identify speed and gear, and anomaly detection in vehicle movements. Its demonstration during AVL vehicle testing shows its practical applicability in real-world scenarios.

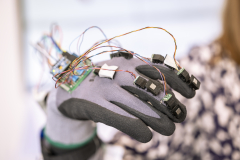

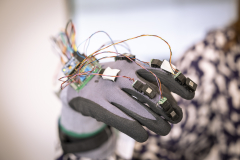

Hand Gesture Demonstrator: The Hand Gesture Demonstrator creates a digital twin of the hand for applications such as hand tracking, action recognition, and remote machinery operation. Using cost-effective sensors, it captures accurate data on hand position (using UWB technology), finger movements, and interactions with objects. This demonstrator showcases our research in TinyAI, Machine Learning (ML), and advanced UWB technology.

Area 4.2: Cognitive Production Systems

Axial Torque Measurement: We developed a new approach for controlling and determining the power demand in polymer processing and successfully implemented a proof of concept based on the laser deflection method. Multiple mirrors attached to the barrel allow its axial deformation to be measured with a resolution of a few microradians. These results have been patented as No. 20-P-009 AT.

P2D2 (Power Processing for Defect Detection): End-of-line testing is a common quality-control method, but defects found at that stage can require disassembly and rework. P2D2 instead detects defects during part manufacturing by analyzing machining data, eliminating the need for additional sensors. This makes it possible to detect and quantify defects while the part is still being produced.

Photos: Lunghammer